Search engine optimization is an ever-evolving field, and JavaScript SEO is no exception.

With the rise of dynamic web pages, it has become increasingly important to optimise sites written in JavaScript for search engines. That’s why we’ve put together this definitive guide.

Here you’ll find all the information you need on how to properly configure your website so that it gets indexed and can be found easily by users searching online.

So whether you’re a web developer looking for tips on optimising your website or just someone curious about what JavaScript SEO entails, this guide will provide everything you need to know!

What is JavaScript SEO?

It is a branch of SEO focused solely on optimising websites built with JavaScript for search engine visibility.

The goal is to make sure that these websites are crawled and indexed correctly by search engines, making them easier to find on the web for users.

So, what is JavaScript?

It is a programming language used to create interactive websites and web applications.

JavaScript is increasingly used for dynamic web pages, which require more complex elements than traditional HTML/CSS websites.

For example, it is used for creating menus, forms, notifications, and pop-up windows.

This means that optimising these websites for search engine visibility requires a special set of techniques and practices.

Is JavaScript Good or Bad for SEO?

The answer is that it depends.

As long as JavaScript is properly implemented and configured for search engine visibility, it can be beneficial for SEO.

In fact, some of the most popular websites you are probably visiting every day are built using this programming language.

Think of sites like Facebook, Twitter, or Instagram. They all rely heavily on JavaScript for functionality and interactivity, yet are still easily found in search engine results.

However, if the JavaScript is not properly implemented or configured for SEO visibility, it can actually hinder your website’s performance in the SERPs.

How Does JavaScript Affect SEO?

JavaScript affects SEO in two main ways.

First, it can cause search engine crawlers to be unable to access the content on your website.

Second, it can cause the rendered content to be different from what is actually on the pages.

This means that search engine crawlers may not be able to detect the content on your website and thus, will not be able to index it properly.

In other words, your website may not show up in search engine results or be as visible as it could be.

In particular, JavaScript has the potential to affect a number of on-page elements and ranking factors that are essential for SEO success, including:

- Rendered Content

- Links

- Lazy-Loaded Images

- Page Load Times

- Metadata

Rendered Content

It is important to ensure that search engine bots are able to access the same content that is visible to users in their browsers.

This means that any content rendered by JavaScript should be easily accessible and indexable by search engine crawlers.

If crawlers cannot access the content rendered by JavaScript, they cannot index it.

This can keep your pages, or parts of them, out of search engine results.

Links

It is crucial to make sure that JavaScript-generated links are accessible to search engine crawlers.

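For example, if a button uses JavaScript to open a pop-up window or navigate to another page, the destination should also be reachable through a standard <a> element with an href attribute, because crawlers follow links rather than click buttons.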

Otherwise, they will not be able to follow the links and index the content on your website.
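To make this concrete, here is a minimal sketch (not a full HTML parser) that lists the link targets a crawler could follow in a snippet of HTML. The function name extractCrawlableLinks and the sample markup are made up for illustration, and the regular expression assumes reasonably tidy HTML.

```javascript
// Sketch: list the link targets a crawler can follow in a piece of HTML.
// Assumption: only <a> elements with a real URL in href count as crawlable.
function extractCrawlableLinks(html) {
  const anchorPattern = /<a\b[^>]*?\shref\s*=\s*(["'])(.*?)\1[^>]*>/gi;
  const links = [];
  let match;
  while ((match = anchorPattern.exec(html)) !== null) {
    const href = match[2].trim();
    // Skip targets that do not point at another URL.
    if (href === '' || href.startsWith('#') || href.toLowerCase().startsWith('javascript:')) {
      continue;
    }
    links.push(href);
  }
  return links;
}

const sampleMarkup = `
  <a href="/products/shoes">Shoes</a>
  <span onclick="goTo('/products/hats')">Hats</span>
  <a href="javascript:void(0)" onclick="openPopup()">Offers</a>
  <a onclick="goTo('/sale')">Sale</a>
`;

console.log(extractCrawlableLinks(sampleMarkup)); // [ '/products/shoes' ]
```

Only the first link survives: the other three depend on a click handler, so a crawler has nothing to follow.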

Lazy-Loaded Images

It is also important to make sure that images loaded with JavaScript are properly indexed by search engine crawlers.

If an image is loaded with JavaScript, it should include a standard <img> tag accessible to search engine crawlers.

Otherwise, the images will not be indexed and your website could suffer from poor visibility in the SERPs.
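As a rough sketch, the helper below builds a standard <img> tag that relies on the browser's native loading="lazy" attribute, so the image URL stays in the markup. The function names and the example image are hypothetical.

```javascript
// Escape characters that have a special meaning inside HTML attributes.
function escapeAttribute(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/"/g, '&quot;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
}

// Build an <img> tag that browsers can lazy-load natively.
// The src stays in the HTML, so crawlers can still discover the image.
function lazyImageTag({ src, alt, width, height }) {
  return `<img src="${escapeAttribute(src)}" alt="${escapeAttribute(alt)}" ` +
    `width="${Number(width)}" height="${Number(height)}" loading="lazy">`;
}

console.log(lazyImageTag({
  src: '/img/red-shoes.jpg',
  alt: 'Red "trail" shoes',
  width: 800,
  height: 600,
}));
// <img src="/img/red-shoes.jpg" alt="Red &quot;trail&quot; shoes" width="800" height="600" loading="lazy">
```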

Page Load Times

JavaScript can also affect page load times, as it requires extra time to be executed.

Search engines take page speed into account when ranking websites, so it is important to make sure that your website’s JavaScript is optimised for fast page loads.

Metadata

Finally, metadata such as titles and meta descriptions are often rendered through JavaScript.

It is important to ensure that these elements are properly rendered for search engines, as they are essential for SEO.

Which Site Elements Are Generated by JavaScript?

In order to ensure that your JavaScript is properly configured for SEO, it is important to understand which site elements are generated by this programming language.

Some of the most common JavaScript-generated site elements include:

- Pagination

- Internal links

- Top products

- Reviews

- Comments

Pagination

Pagination allows users to quickly navigate through a website’s content.

It is commonly used in e-commerce stores, news websites, and other sites that contain multiple pages of content.

In order for search engine crawlers to properly detect paginated content, the links must be accessible via standard HTML.
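For illustration, pagination that stays crawlable can be as simple as a row of ordinary links. The sketch below assumes a hypothetical ?page=N URL scheme; your site may use a different one.

```javascript
// Build pagination as plain <a href> links so crawlers can follow them.
// Assumption: page 1 lives at basePath and later pages at basePath?page=N.
function paginationLinks(basePath, currentPage, totalPages) {
  const items = [];
  for (let page = 1; page <= totalPages; page += 1) {
    const href = page === 1 ? basePath : `${basePath}?page=${page}`;
    items.push(page === currentPage
      ? `<span aria-current="page">${page}</span>`
      : `<a href="${href}">${page}</a>`);
  }
  return `<nav aria-label="Pagination">${items.join(' ')}</nav>`;
}

console.log(paginationLinks('/blog', 2, 3));
// <nav aria-label="Pagination"><a href="/blog">1</a> <span aria-current="page">2</span> <a href="/blog?page=3">3</a></nav>
```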

Internal Links

Internal links are used to link between different pages on a website.

Search engine bots need to be able to detect these links in order for them to properly index the content of your website.

Therefore, it is important to make sure that your internal links are accessible via standard HTML.

Top Products

Many websites use JavaScript to display their top products or services.

These elements must be accessible via standard HTML in order for them to be properly indexed by search engines.

Reviews

Reviews are another important element that is often rendered through JavaScript.

Search engine crawlers need to be able to detect reviews in order for them to be properly indexed.

Therefore, it is important to make sure that your reviews are accessible via standard HTML.

Comments

Finally, many websites use JavaScript to render comments left by users.

Search engine crawlers must be able to detect these comments via HTML in order for them to be properly indexed.

How Google Processes JavaScript

Since it can potentially affect SEO in many ways, it is important to know how Google processes JavaScript.

Google renders pages with its Web Rendering Service, which runs an up-to-date (“evergreen”) version of headless Chromium.

Googlebot can therefore execute JavaScript, but rendering is a separate step that happens after crawling and may be delayed.

For that reason, it is safest to make all content that needs to be indexed available in the initial HTML response.

Any content that only appears after JavaScript runs must still be accessible to Google’s renderer; otherwise, it will not be indexed.

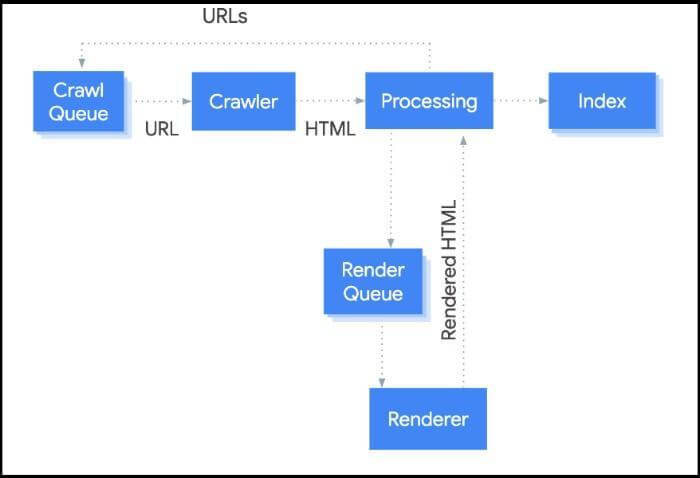

In short, Google divides the processing of JavaScript web apps into three distinct stages:

- Crawling

- Processing

- Indexing

1. Crawling

The crawler sends GET requests to the server and receives a response containing headers as well as the content of the requested file, which is then stored for later use.

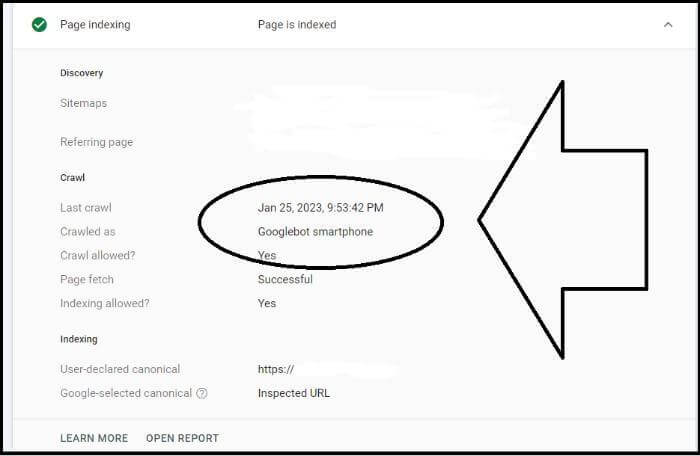

Today, Google primarily uses mobile-first indexing for its requests.

Should you wish to check how your site is being crawled by the search engine giant, simply use their URL Inspection Tool within Search Console and compare against the Coverage information.

Here, you can check whether or not your site has switched over to mobile-first indexing (Googlebot smartphone) with the “Crawled as” data.

There could be some cases where websites may detect the user-agent and then present different content to a crawler. It means that Google could see something different than the users.

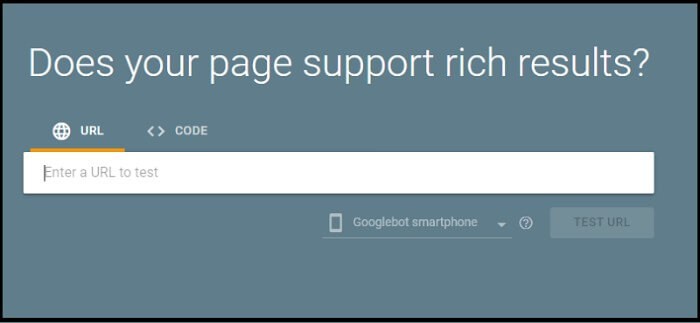

So, it is handy to check tools such as Search Console’s URL Inspection, Mobile-Friendly Test or Rich-Results Test and look for JavaScript SEO issues.

Also, often Google bots have trouble accessing content on certain pages. That’s why you need to be able to test for it, and the Rendering options chapter will show you how!

It is essential to understand that Google not only crawls the output of a page in HTML format but also stores all resources necessary for its construction. This includes Javascript files, CSS, XHR requests, and API endpoints in addition to HTML pages.

2. Processing

The next step is processing the HTML content received by Googlebot.

This includes parsing, DOM tree construction, and rendering before storing a snapshot of the page in the index.

Google has a rendering engine that processes and renders JavaScript code found on pages as closely as possible to how a browser would do it.

It is important to remember that Googlebot does not interact with the page as a user would, so content or layout changes that only happen after the initial render (for example, after a click or a scroll) will not be detected.

For this reason, it is essential to make sure that all content and links are available to Googlebot in the initial HTML response.
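One quick way to see which content depends on JavaScript is to look at the raw HTML response before any script runs. The sketch below is a simplified check under that assumption: it strips scripts, styles, and tags with regular expressions (not a real HTML parser) and reports which key phrases are missing. The function names and sample page are made up.

```javascript
// Reduce an HTML document to its visible text, ignoring scripts and styles.
function visibleText(html) {
  return html
    .replace(/<script\b[\s\S]*?<\/script>/gi, ' ')
    .replace(/<style\b[\s\S]*?<\/style>/gi, ' ')
    .replace(/<[^>]+>/g, ' ')
    .replace(/\s+/g, ' ')
    .trim();
}

// Return the phrases that do NOT appear in the raw HTML response.
function missingFromInitialHtml(html, phrases) {
  const text = visibleText(html).toLowerCase();
  return phrases.filter((phrase) => !text.includes(phrase.toLowerCase()));
}

const rawResponse = `
  <html><head><title>Trail Shoes</title></head>
  <body>
    <h1>Trail Shoes</h1>
    <div id="reviews"></div>
    <script>loadReviews('#reviews', 'Great grip on wet rock');</script>
  </body></html>
`;

console.log(missingFromInitialHtml(rawResponse, ['Trail Shoes', 'Great grip on wet rock']));
// [ 'Great grip on wet rock' ]
```

Here the heading is part of the initial HTML, while the review text only exists inside a script and would have to be rendered before it can be indexed.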

3. Indexing

The last step is indexing and ranking, where the content is finally indexed and stored in Google’s search index.

Google does not rank JavaScript websites differently from regular HTML sites. However, if the content seen by Googlebot is different from what users see, Google may treat this as cloaking and penalise the website by lowering its search ranking.

Rendering Options

The most important tip to keep in mind when it comes to JavaScript SEO is that all content must be visible to search engine crawlers.

To do this, there are two main ways of rendering content for Googlebot:

- Server-side

- Client-side

Server-side rendering involves using the server to generate HTML that is then sent to the browser.

This ensures that all content will be visible to crawlers and reduces the need for additional processing by Googlebot.

Client-side rendering, on the other hand, relies on JavaScript code running in the browser to generate HTML.

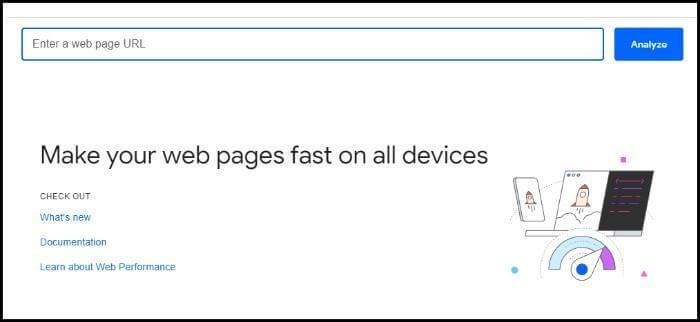
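The difference between the two approaches is easiest to see in the HTML that leaves the server. The following sketch uses two hypothetical functions and a made-up product to contrast a server-rendered response with a client-side shell; it is an illustration, not a complete rendering setup.

```javascript
// Escape text before placing it into HTML.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Server-side rendering: the response already contains the content.
function renderServerSide(product) {
  return `<!doctype html>
<html>
<head><title>${escapeHtml(product.name)}</title></head>
<body>
<h1>${escapeHtml(product.name)}</h1>
<p>${escapeHtml(product.description)}</p>
</body>
</html>`;
}

// Client-side rendering: the response is an empty shell plus a script
// that has to run before any content exists.
function renderClientSideShell(scriptUrl) {
  return `<!doctype html>
<html>
<head><title>Loading</title></head>
<body>
<div id="app"></div>
<script src="${escapeHtml(scriptUrl)}"></script>
</body>
</html>`;
}

const product = { name: 'Trail Shoes', description: 'Lightweight shoes for wet & rocky paths.' };
console.log(renderServerSide(product).includes('Lightweight shoes')); // true
console.log(renderClientSideShell('/app.js').includes('Lightweight shoes')); // false
```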

Google offers several tools to help developers debug and optimise their JavaScript-driven websites.

The first is Google’s PageSpeed Insights Tool, which will run a series of tests on the site and provide information about performance issues that could be affecting SEO efforts.

The second is Google’s Rich Results Test, which shows the rendered HTML and can be used to check whether Googlebot is able to access JavaScript content.

Finally, you can also use the Lighthouse tool in Chrome DevTools to check for SEO issues related to JavaScript.

Common JavaScript SEO Errors

It is important to note that some common errors can be made when trying to make JavaScript websites SEO-friendly.

Here are some reasons why Google might struggle to crawl, render, or index your JavaScript content:

- Not having enough crawlable content

- Using AJAX/SPAs without proper URL structures

- Excessive blocking of resources

- Inadequate server response times

- Inadequate caching of dynamic content

Not having enough crawlable content

This error happens when Googlebot can’t find enough content in the HTML response to index.

The best way to fix this is to make sure that all content is immediately visible – either by using server-side rendering, setting up a sitemap, or providing crawlable links in the HTML response.

Using AJAX/SPAs without proper URL structures

When using AJAX and Single Page Applications, it is essential to provide proper URL structures for each page.

If Googlebot encounters a lot of dynamic URLs that are hard to crawl, then it may not be able to index the content properly.

Excessive blocking of resources

Ensure that any important resources such as JavaScript files, images, and stylesheets are not blocked by robots.txt or meta tags, as this will prevent Googlebot from being able to crawl them properly.

Inadequate server response times

It is important to ensure that your server can handle the requests coming from Googlebot in a timely manner.

If the server is too slow then Googlebot may not be able to index the content properly.

Inadequate caching of dynamic content

If you have a lot of dynamic content on your website then it can be beneficial to cache it so that Googlebot does not have to request it every time it crawls the page.

These are just some of the common JavaScript SEO errors that can occur on a website.

It is important to be aware of them and take steps to fix them in order to ensure that your website is crawled and indexed correctly.

How to Create a JavaScript SEO-Friendly Website

When it comes to JavaScript SEO, three primary elements are essential for success:

- Crawlability (ensuring Google can properly and effectively scan your website’s structure)

- Renderability (making sure Google can view the pages on your website accurately)

- Crawl budget (the number of URLs Google can and wants to crawl on your website in a given period)

Without these components in place, you won’t be able to reach optimal search engine rankings.

Now that you understand these important points, let’s move forward to practical things to consider.

Check them all to ensure that your website is optimised to be crawled and indexed by Googlebot:

- On-Page SEO

- Crawling

- URLs

- Sitemap

- Error Pages

- Redirects

- Lazy Loading

- Internationalisation

On-Page SEO

The first step is to make sure that your website’s content is optimised. This includes optimising title tags, meta descriptions, and heading tags. The same on-page SEO rules apply as for any other website.

And remember that JavaScript can be used to alter or modify the meta description, and even the <title> element.
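If you would rather not depend on JavaScript for these tags, one option is to generate them where the HTML is produced. The following is a minimal sketch with a hypothetical renderHeadTags helper and made-up page data.

```javascript
// Escape text before placing it into HTML.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Build the <title> and meta description as plain HTML,
// so they are present before any client-side JavaScript runs.
function renderHeadTags({ title, description }) {
  return [
    `<title>${escapeHtml(title)}</title>`,
    `<meta name="description" content="${escapeHtml(description)}">`,
  ].join('\n');
}

console.log(renderHeadTags({
  title: 'Trail Shoes | Example Store',
  description: 'Lightweight trail shoes for wet & rocky paths.',
}));
// <title>Trail Shoes | Example Store</title>
// <meta name="description" content="Lightweight trail shoes for wet &amp; rocky paths.">
```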

Crawling

It is important to ensure that your website can be crawled and indexed by Googlebot.

This includes checking that the robots.txt file is configured correctly, as well as making sure that any important resources such as JavaScript files, images, and stylesheets are not blocked.

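If other rules in your robots.txt block these files, the simplest way to make the resources needed for rendering crawlable is to add:

User-agent: Googlebot

Allow: /*.js

Allow: /*.css

Bear in mind that Google applies the most specific (longest) matching rule, so a longer Disallow rule can still override these lines.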
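To get a feel for how such rules interact, here is a simplified sketch of Google-style matching, assuming patterns written with a leading slash, "*" as a wildcard, and an optional trailing "$". It is an approximation for experimenting, not Google's actual parser, and the /assets/ rules are invented for the example.

```javascript
// Turn a robots.txt path pattern into a regular expression.
// "*" matches any run of characters; a trailing "$" anchors the end.
function patternToRegExp(pattern) {
  const anchored = pattern.endsWith('$');
  const body = anchored ? pattern.slice(0, -1) : pattern;
  const escaped = body
    .split('*')
    .map((part) => part.replace(/[.*+?^${}()|[\]\\]/g, '\\$&'))
    .join('.*');
  return new RegExp('^' + escaped + (anchored ? '$' : ''));
}

// Decide whether a path may be crawled: the longest matching pattern wins,
// and "allow" wins a tie. A path with no matching rule is allowed.
function isAllowed(rules, path) {
  let best = null;
  for (const rule of rules) {
    if (!patternToRegExp(rule.pattern).test(path)) continue;
    const longer = best === null || rule.pattern.length > best.pattern.length;
    const tieForAllow = best !== null &&
      rule.pattern.length === best.pattern.length &&
      rule.type === 'allow';
    if (longer || tieForAllow) best = rule;
  }
  return best === null || best.type === 'allow';
}

// Hypothetical rules: block /assets/ but re-allow scripts and stylesheets.
const googlebotRules = [
  { type: 'disallow', pattern: '/assets/' },
  { type: 'allow', pattern: '/assets/*.js$' },
  { type: 'allow', pattern: '/assets/*.css$' },
];

console.log(isAllowed(googlebotRules, '/assets/app.js'));    // true
console.log(isAllowed(googlebotRules, '/assets/data.json')); // false
console.log(isAllowed(googlebotRules, '/about'));            // true
```

The allow patterns are written to be longer than the disallow rule on purpose; a short pattern such as /*.js would lose against /assets/ under the longest-match rule.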

URLs

When JavaScript swaps the content of the page for a new view, make sure the URL changes as well, so that every view has its own address.

JavaScript frameworks come with a router for clean, navigable URLs, so hashes (#) should not be used for routing. This is especially relevant for earlier versions of Angular and for Vue.

For example, if your URL looks like abc.com/#something, then anything after the # is not sent to the server and is generally ignored by crawlers (which isn’t what we want).

To fix this problem on Vue sites in particular, ask your developer to switch the router from hash mode to history mode.
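As an illustration of what the change means for your URLs, the sketch below converts a hash-routed address into the clean path a history-mode router would use. The function name is hypothetical, and it only handles simple routes without query strings inside the hash.

```javascript
// Convert a hash-routed URL such as https://abc.com/#something
// into the clean URL a history-mode router would use.
function hashUrlToCleanUrl(input) {
  const url = new URL(input);
  // Drop the leading "#", "#/" or "#!/" from the fragment.
  const route = url.hash.replace(/^#!?\/?/, '');
  if (route === '') return url.href; // nothing to convert
  const basePath = url.pathname.endsWith('/') ? url.pathname : url.pathname + '/';
  url.pathname = basePath + route;
  url.hash = '';
  return url.href;
}

console.log(hashUrlToCleanUrl('https://abc.com/#something'));   // https://abc.com/something
console.log(hashUrlToCleanUrl('https://abc.com/shop/#/shoes')); // https://abc.com/shop/shoes
```

In the browser, a router in history mode achieves the same result through the History API (for example history.pushState), and the server has to be configured to answer those clean paths. Old hash URLs cannot be redirected by the server, because the fragment never reaches it.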

Sitemap

Clean URLs can be achieved with the routers of JavaScript frameworks.

To find a module that generates sitemaps from your routes, just perform an online search for “<system name> + router sitemap”. For instance, if you’re using the Vue framework, type “Vue router sitemap” in the search engine.

Additionally, many rendering solutions also feature built-in support for generating sitemaps; to locate them, use queries like “<system name> + sitemap”.
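If no ready-made module fits, a basic sitemap is not hard to produce yourself. Here is a minimal sketch that turns a list of routes into sitemap XML following the sitemaps.org format; the buildSitemap name, the example domain, and the routes are assumptions for illustration.

```javascript
// Escape characters that are not allowed as-is in XML text.
function escapeXml(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;');
}

// Build a sitemap from a base URL and a list of route paths.
function buildSitemap(baseUrl, routes) {
  const entries = routes
    .map((route) => `  <url><loc>${escapeXml(new URL(route, baseUrl).href)}</loc></url>`)
    .join('\n');
  return '<?xml version="1.0" encoding="UTF-8"?>\n' +
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n' +
    entries + '\n</urlset>';
}

console.log(buildSitemap('https://www.example.com', ['/', '/products/shoes', '/blog?page=2']));
```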

With these simple searches finding a fitting solution should not be difficult!

Error Pages

JavaScript frameworks run client-side, so on their own they cannot return a server status code such as 404 Not Found.

To fix this, you have two alternatives:

- Add a JavaScript redirect to a page for which the server does respond with an appropriate status code such as 404;

- Add a noindex tag to the failing page along with a message such as “404 Page Not Found”. Because the actual status code is still 200 OK, the page will then be treated as a soft 404.
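Both alternatives can be sketched in a few lines. In the example below, the function names and the /not-found path are hypothetical, and the window and document objects are passed in as parameters so the sketch also runs outside a browser with simple stand-ins.

```javascript
// Option 1: send the browser to a URL for which the server returns 404.
// "/not-found" is a hypothetical path; the server must answer it with a 404.
function redirectToNotFound(win) {
  win.location.href = '/not-found';
}

// Option 2: keep the URL, but add a noindex tag and an error message.
function markPageAsNotFound(doc) {
  const meta = doc.createElement('meta');
  meta.name = 'robots';
  meta.content = 'noindex';
  doc.head.appendChild(meta);
  doc.title = '404 Page Not Found';
  return meta;
}

// Minimal stand-ins for window and document, for running outside a browser.
const fakeWindow = { location: { href: '/products/unknown' } };
const fakeDocument = {
  title: 'Product',
  head: { children: [], appendChild(node) { this.children.push(node); } },
  createElement(tagName) { return { tagName }; },
};

redirectToNotFound(fakeWindow);
markPageAsNotFound(fakeDocument);
console.log(fakeWindow.location.href);      // /not-found
console.log(fakeDocument.head.children[0]); // { tagName: 'meta', name: 'robots', content: 'noindex' }
```

In a real page you would call one of the two functions with the browser's own window or document once you know the requested item does not exist.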

Redirects

SEO experts are used to 301/302 redirects, which are server-side. JavaScript redirects, by contrast, usually run client-side.

This is fine: Google processes the page by following the redirect, and signals such as PageRank will be passed on.

JavaScript redirects can usually be identified in code by searching for “window.location.href”.
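That search can be automated with a small script. The sketch below scans source code line by line for a few common redirect patterns; the list of patterns is an assumption and certainly not exhaustive, and the sample script is made up.

```javascript
// Patterns that commonly indicate a client-side redirect.
// "=(?!=)" matches an assignment but not a "==" or "===" comparison.
const redirectPatterns = [
  /\b(?:window\.)?location\.href\s*=(?!=)/,
  /\b(?:window\.)?location\.(?:replace|assign)\s*\(/,
  /\bwindow\.location\s*=(?!=)/,
];

// Return the lines (1-based) that look like JavaScript redirects.
function findJsRedirects(source) {
  return source
    .split('\n')
    .map((text, index) => ({ line: index + 1, text: text.trim() }))
    .filter(({ text }) => redirectPatterns.some((pattern) => pattern.test(text)));
}

const exampleScript = [
  "if (window.location.href === '/old') {",
  "  window.location.href = '/new';",
  "}",
  "location.replace('/moved');",
].join('\n');

console.log(findJsRedirects(exampleScript).map((hit) => hit.line)); // [ 2, 4 ]
```

Line 1 only reads the current URL in a comparison, so it is not reported; lines 2 and 4 actually change the location.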

Lazy Loading

When dealing with JavaScript frameworks, you can find a module to handle lazy loading almost anywhere. In React, for example, React.lazy and Suspense are commonly used for this.

You may opt to lazily load images, but be careful about doing the same with content: although it is possible with JavaScript, the content might not get indexed correctly by search engines.

Internationalisation

For most frameworks, there are a few modules available to provide the features necessary for internationalisation such as hreflang.

These can usually be ported over to any system and typically include options like i18n, intl, or even Helmet (the same module used for tags in the page head), which can also be used to add the necessary tags.
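Whichever module you choose, the output should be a set of alternate link tags in the page head. As a minimal sketch, assuming a hypothetical hreflangLinks helper and made-up example URLs:

```javascript
// Build hreflang link tags from a map of language code -> absolute URL.
function hreflangLinks(alternates) {
  return Object.entries(alternates)
    .map(([lang, url]) => {
      // new URL() normalises the address and throws on invalid input.
      const href = new URL(url).href.replace(/&/g, '&amp;');
      return `<link rel="alternate" hreflang="${lang}" href="${href}">`;
    })
    .join('\n');
}

console.log(hreflangLinks({
  'en-gb': 'https://www.example.com/en-gb/',
  'de-de': 'https://www.example.com/de-de/',
  'x-default': 'https://www.example.com/',
}));
// <link rel="alternate" hreflang="en-gb" href="https://www.example.com/en-gb/">
// <link rel="alternate" hreflang="de-de" href="https://www.example.com/de-de/">
// <link rel="alternate" hreflang="x-default" href="https://www.example.com/">
```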

JavaScript SEO FAQ

Q: How to check if a site is built with JavaScript?

A: The easiest way is to inspect the source code of a website and search for “script”. If you see JavaScript script tags, then it means that there are some elements of the website built with this programming language.

Q: Can Googlebot click buttons on a website or scroll?

A: No, Googlebot is not able to click buttons or scroll on a website. It needs to be provided with the direct link that leads to the content you want it to index.

Q: JavaScript SEO vs iframe SEO?

A: Although there are similarities between the two, they should be handled differently when it comes to SEO. Iframes can be seen by Googlebot, but they are not crawled or indexed as effectively as plain HTML. JavaScript, on the other hand, can be used to alter and manipulate content that can be indexed by search engines.

Q: Does Google run JavaScript in SEO?

A: Yes, Google is able to run JavaScript. Googlebot renders pages with an up-to-date (“evergreen”) version of Chromium and can render and index content that is generated through JavaScript.

Q: Which JavaScript framework is SEO friendly?

A: SEO-friendly JavaScript frameworks include React, Preact, Angular, Vue.js, and more. These frameworks can be used to create SEO-friendly websites that are easily crawled and indexed by search engines.

Q: Is JavaScript as fast as Python?

A: It depends on the use case. JavaScript is the language that runs in browsers and is the usual choice for front-end development, while Python is often preferred for back-end development and data processing.

Q: Is JavaScript the hardest language to learn?

A: No. JavaScript is one of the most popular programming languages and is generally considered approachable for beginners. However, mastering more advanced concepts such as asynchronous programming may take some time. With enough practice, anyone can learn JavaScript.

Q: What popular companies use JavaScript?

A: Popular companies that use JavaScript include Google, Amazon, Facebook, and Netflix. These companies rely on JavaScript to power their websites and applications.

Q: Is JavaScript SEO going to disappear in the future?

A: No, it is not. JavaScript has become an essential part of the web experience and search engines have adapted to indexing content built with this language. It is not going away anytime soon.

Q: Does adding JavaScript hurt SEO?

A: Not necessarily, as long as you are following best practices for optimising your site for search engines. Google is able to crawl and index content created with JavaScript, so there should not be any issues with your SEO. However, it is important to ensure that all of your scripts are loaded properly and don’t interfere with the rendering of your page.

Q: What are the benefits of using JavaScript for SEO?

A: JavaScript can be used to create dynamic, interactive content which, when it is rendered correctly, search engines can index and rank. Additionally, it can help improve the user experience on your website by making it more responsive and interactive. Finally, it can also enable you to track users’ interactions with your website in order to better understand how they are using your site and what content they are engaging with.

Key Takeaways

JavaScript SEO is an essential part of optimising websites for search engine visibility.

To make sure your site is crawled and indexed correctly, provide proper URL structures and configure robots.txt and hreflang tags properly.

Additionally, sitemaps help Googlebot discover your URLs, and lazy loading reduces the amount of data that has to be downloaded up front.

By understanding these concepts, you can ensure that your website is properly optimised for search engines.

And don’t forget to read Google’s official documentation and troubleshooting guide for the most up-to-date information on SEO and JavaScript.

Happy optimising!