Online platforms are flooded with vast amounts of user-generated content, and artificial intelligence (AI) has become an indispensable ally in the battle against inappropriate material and the enforcement of community guidelines.

However, AI’s role in content moderation and censorship isn’t without controversy. As these algorithms screen and sometimes automatically remove content, questions about accuracy, bias, and the suppression of free speech come to the forefront.

Overview

This article explores how AI is shaping the landscape of digital communication, the challenges it faces, and the balance it must strike between protecting users and preserving freedom of expression.

Here’s a concise overview of what we will discuss:

- Understanding AI in Content Moderation: Definition and primary functions.

- Benefits of AI Moderation: Efficiency, consistency, and scalability in handling content.

- Challenges and Limitations: Issues such as bias, false positives, and ethical concerns.

- Censorship Implications: The impact of AI on freedom of speech and information.

- Future Directions: Emerging trends and potential advancements in AI moderation technologies.

By the end of this article, you will understand how AI is changing content moderation and what that means for society more broadly.

Evolution of Content Moderation

Over the years, content moderation has transformed significantly, adapting to the evolving demands of the digital age. Initially, moderation relied heavily on human oversight, where individuals would painstakingly sift through posts and comments to enforce community guidelines. This manual method was not only time-consuming but also inconsistent, with different moderators often applying rules differently.

As the internet grew, so did the volume of content, making purely manual moderation impractical. Platforms shifted towards automated systems even before AI became prevalent. These early systems used basic keyword filters to flag potentially harmful content, but they were not very sophisticated. They often missed nuanced or context-dependent issues, leading to both over- and under-moderation and frustrating users and platform owners alike.
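To make the over- and under-moderation problem concrete, here is a minimal sketch of a keyword filter in Python. The blocklist, the function names, and the sample posts are all invented for illustration and do not describe any particular platform's filter.

```python
import re

# Hypothetical blocklist, chosen only for illustration.
BLOCKLIST = {"scam", "spam"}


def substring_filter(text: str) -> bool:
    """Flag text if a blocked term appears anywhere, even inside another word."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKLIST)


def whole_word_filter(text: str) -> bool:
    """Flag text only when a blocked term appears as a standalone word."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return any(word in BLOCKLIST for word in words)


if __name__ == "__main__":
    samples = [
        "This offer is a scam",      # harmful and spelled plainly
        "I love scampi for dinner",  # harmless, but contains "scam" as a substring
        "This offer is a sc4m",      # harmful, but obfuscated
    ]
    for sample in samples:
        print(sample, substring_filter(sample), whole_word_filter(sample))
```

The substring version flags the harmless "scampi" post (over-moderation), and neither version catches the obfuscated "sc4m" (under-moderation). Matching whole words removes the first error but not the second, which is one reason simple filters struggled with nuance.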

Benefits of AI Moderation

AI moderation significantly enhances the efficiency and consistency of content oversight on digital platforms. It can process vast amounts of data far more quickly than human moderators could. This speed means harmful content like hate speech or misinformation can be identified and addressed faster, improving overall user experience and safety.

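You’re also getting a more uniform application of rules. Whereas human moderators might interpret guidelines differently, an AI system applies the same learned standards to every post. This reduces inconsistency between individual reviewers, although it does not by itself guarantee fair treatment, because a model can still reproduce biases present in its training data.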

Additionally, AI doesn’t tire like humans, so it can work around the clock, continuously monitoring for inappropriate content. This relentless oversight helps maintain a clean and respectful environment on your favourite platforms.

Challenges Faced by AI

Despite its advantages, AI faces significant challenges in content moderation. AI can’t always grasp nuances like sarcasm, cultural context, or complex language subtleties. These limitations lead to errors in judgment: harmless content gets flagged (a false positive) or inappropriate material slips through (a false negative).

Furthermore, AI relies heavily on the data it’s trained on. If this data is biased, the model’s decisions will be skewed in the same direction and will perpetuate those biases. This undermines fairness and accuracy in content moderation, and it can create a cycle in which certain users are repeatedly treated unfairly.
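One way to check for skewed decisions is to compare how often harmless posts are wrongly flagged across different groups of users. The sketch below assumes you already have labelled moderation records. The record layout, the group names, and the sample numbers are made up for illustration.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Return {group: share of harmless items that were wrongly flagged}.

    Each record is a tuple of (group, is_harmful, was_flagged).
    Groups with no harmless items are left out of the result.
    """
    harmless = defaultdict(int)
    wrongly_flagged = defaultdict(int)
    for group, is_harmful, was_flagged in records:
        if not is_harmful:
            harmless[group] += 1
            if was_flagged:
                wrongly_flagged[group] += 1
    return {group: wrongly_flagged[group] / harmless[group] for group in harmless}


# Made-up audit sample: (group, is_harmful, was_flagged)
SAMPLE = [
    ("dialect_a", False, False),
    ("dialect_a", False, False),
    ("dialect_a", False, True),
    ("dialect_a", False, False),
    ("dialect_a", True, True),
    ("dialect_b", False, True),
    ("dialect_b", False, True),
    ("dialect_b", False, False),
    ("dialect_b", False, False),
    ("dialect_b", True, True),
]

if __name__ == "__main__":
    for group, rate in false_positive_rate_by_group(SAMPLE).items():
        print(f"{group}: {rate:.0%} of harmless posts flagged")
```

In this invented sample, harmless posts from the second group are flagged twice as often as those from the first. A gap like that in real data would be a signal to re-examine the training data, though a single metric cannot prove or rule out bias on its own.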

Also, AI systems require continuous updates to adapt to evolving language and new forms of communication. Without constant refinement, they become less effective, making the task of maintaining a safe online environment increasingly challenging.

Ethical Considerations in AI

You must consider the ethical implications when employing AI for content moderation. As you integrate these systems, it’s crucial to address biases that can infiltrate AI algorithms. These biases could skew AI decisions, unfairly targeting or protecting certain groups. You’ll need to ensure transparency in how decisions are made.

Furthermore, it’s important you respect user privacy. AI tools often analyze vast amounts of personal data to determine content appropriateness. You have to establish strict protocols to protect this data from misuse or unauthorized access.

Lastly, consider the accountability of AI systems. You should have mechanisms in place to challenge and rectify AI decisions, ensuring they’re fair and justifiable, and keeping your users’ trust intact.

Impact on Freedom of Speech

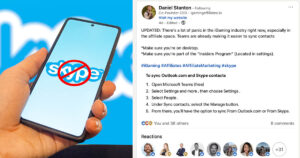

AI-driven content moderation can significantly impact freedom of speech, potentially limiting what you’re able to express online. As these systems scan and filter vast amounts of data, they often rely on algorithms that may not fully understand the context or the nuances of human language. This can lead to the unintended censorship of legitimate content, stifling your ability to participate in meaningful discussions.

You might find your posts or comments wrongly flagged or removed, simply because the AI misinterpreted your words. Moreover, the lack of transparency in how these decisions are made by machines leaves you in the dark, unable to challenge or understand why your expression was restricted.

This raises concerns about how much control you really have over your own words in the digital space.

AI vs Human Moderation

When comparing AI and human content moderation, it’s clear that each approach has its unique strengths and challenges. AI can process vast amounts of data quickly, constantly learning from new patterns, which means you’re less likely to see harmful content slip through. However, it might struggle with context, leading to unnecessary censorship of your posts.

Humans, on the other hand, understand nuance and context better, allowing for more accurate decisions. Yet, they can’t match AI’s speed and are more susceptible to bias based on personal beliefs or experiences.

This trade-off between speed with limited contextual understanding in AI and insight with potential bias in humans shapes how effectively your content is moderated.

Future Trends in Censorship

Looking ahead, advancements in AI technology are set to redefine the boundaries of censorship. As tools get smarter, the strategies used to manage digital content will evolve rapidly. Machine learning may allow systems to adapt more quickly to the nuances of context, slang, and emerging communication trends. This shift will likely bring more proactive content regulation that aims to anticipate and mitigate harmful content before it spreads widely.

Moreover, you’ll see an increase in personalized censorship, where content filtering becomes more tailored to individual viewing preferences and cultural sensitivities. This could lead to a more segmented internet, where your experience is drastically different from someone else’s based on your digital profile and geographic location.

Balancing AI Efficiency and Accuracy

Balancing the efficiency and accuracy of AI in content moderation presents a significant challenge. Increasing speed often compromises the thoroughness of content analysis. The aim is a middle ground where AI can quickly scan vast amounts of data while maintaining a high level of precision in identifying harmful content.

To achieve this, you need to continuously train the AI with diverse datasets, which helps in reducing biases and errors. It’s crucial that you also integrate human oversight to catch nuances that AI might miss.
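A common way to combine automated speed with human oversight is to act automatically only when the model is confident and to send uncertain cases to a person. The sketch below assumes a classifier that outputs a harm score between 0 and 1. The threshold values and all names are hypothetical and would need tuning against real data.

```python
from dataclasses import dataclass

# Hypothetical thresholds, not recommendations.
REMOVE_ABOVE = 0.95  # at or above this score, remove automatically
ALLOW_BELOW = 0.20   # below this score, allow automatically


@dataclass
class Decision:
    action: str  # "remove", "allow", or "human_review"
    score: float


def route(score: float) -> Decision:
    """Route a post based on the model's harm score (0 = benign, 1 = harmful)."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= REMOVE_ABOVE:
        return Decision("remove", score)
    if score < ALLOW_BELOW:
        return Decision("allow", score)
    # Uncertain cases go to a human, who can weigh context the model may miss.
    return Decision("human_review", score)


if __name__ == "__main__":
    for score in (0.99, 0.60, 0.05):
        print(score, route(score).action)
```

Widening the band between the two thresholds sends more posts to human reviewers, which costs speed but reduces automated mistakes. Narrowing it does the opposite.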

Remember, the goal isn’t just to moderate content swiftly but also to ensure that the moderation is fair and respects free speech. Balancing these aspects is key to effective content moderation.

How does AI distinguish between harmful speech and legitimate free expression?

AI distinguishes between harmful speech and legitimate free expression by analyzing language patterns, context, and user behavior.

It is trained to recognize harmful content while leaving legitimate opinions untouched, although in practice it does not always draw that line correctly.

What impact does AI moderation have on the mental health of human moderators?

AI moderation can help reduce the mental toll on human moderators by filtering out disturbing content.

However, moderators who review the cases that AI escalates or gets wrong are still exposed to harmful material, and that constant exposure can affect their mental health.

Balancing technology and human well-being is crucial.

Can users appeal against decisions made by AI content moderators, and how?

If you disagree with AI content moderators’ decisions, you can often appeal them. Look for appeal options on the platform.

Follow the provided guidelines to submit your appeal and provide relevant information for review.

Conclusion

AI plays a crucial role in content moderation and censorship, enabling platforms to efficiently filter inappropriate content while also raising concerns about potential biases and limitations.

As technology continues to evolve, it is essential for organizations to strike a balance between leveraging AI for enhanced moderation and ensuring transparency and accountability in decision-making processes.

Embracing the benefits of AI while addressing its challenges will be key in shaping the future of online content regulation.