ChatGPT made it easy for millions of people to generate professional-looking text without writing it themselves. This quickly created a market for tools that try to detect whether something was generated by ChatGPT. Teachers wanted to know if students were cheating, and publishers wanted to know if writers were scamming them.

Today there are many tools, paid or free, that call themselves “AI detection tools” or “AI checkers”. All of them give you some kind of metric or score after analysing the text. But how do they work, and can they actually detect whether a text was written by an AI?

Let’s look into this.

Do AI Detectors work?

The first question is easy to answer: no, because they don’t work as “detectors”. But they can still be a useful tool in your business.

They are not “detectors” because there is nothing to detect. There is no watermark, thumbprint or DNA to “detect”. There is no “proof of AI”. There is just plain text. You can’t detect anything from the text. The only thing you can do is to compare the text with other texts.

AI detectors are not detectors; they are comparison tools.

Before looking at how you should use them, let’s look at how they work.

How do AI Detectors work?

The AI “detectors” look at the text and try to answer this question: “If an AI had been asked to write this type of text, could the output have looked something like this?”

To do that, they take advantage of how AI writing tools work.

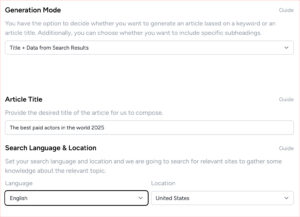

How do AI writing tools work?

AI writing tools, AI helpers and AI text generators use a technology called “Large Language Model” (LLM) to create text. A “Language Model” is a fancy word for “A list of mathematical probabilities”.

Basically it’s just a long list of word probabilities. For example, what is the probability that the next word in the sentence “May the force be with…” will be “you”? (Answer: close to 100%).

This is basically the same thing you do in your head when thinking. You know the end of all these sentences:

- “May the force be with …”

- “Houston, we have a …”

- “Nobody puts Baby in a …”

“You”, “problem” and “corner” are the right answers. You knew that.

The Language Model does this for all word combinations (or to be more accurate, “token” combinations, but that’s a bit too technical). To create a sentence, the LLM just goes word by word and checks the probabilities.

Here is what is called a “probability tree”.

(Image borrowed from Mohamad Aboufoul)

You can see that “parking” has a 30% probability and “drive” has a 70% probability. In this example, the Language Model will write “The car drove into the driveway” because that word order has the highest probability.
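To make the “word by word” idea concrete, here is a minimal Python sketch. The table `NEXT_WORD` and all of its numbers are made up for illustration. A real LLM works with tokens and far more numbers, and it often adds some randomness instead of always picking the top word.

```python
# Hypothetical next-word probabilities. The words and numbers are invented
# for illustration and are not taken from any real language model.
NEXT_WORD = {
    "The": {"car": 0.6, "dog": 0.4},
    "car": {"drove": 0.7, "parked": 0.3},
    "drove": {"into": 0.8, "away": 0.2},
    "into": {"the": 0.9, "a": 0.1},
    "the": {"driveway": 0.7, "parking": 0.3},
}


def generate(start, max_words=10):
    """Build a sentence by always choosing the most probable next word."""
    words = [start]
    # Stop when the last word has no known followers or the limit is reached.
    while len(words) < max_words and words[-1] in NEXT_WORD:
        options = NEXT_WORD[words[-1]]
        words.append(max(options, key=options.get))
    return " ".join(words)


print(generate("The"))  # The car drove into the driveway
```

Always taking the most probable word is the simplest possible strategy. It is used here only because it makes the walk through the tree easy to follow.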

A Language Model is just a collection of such probabilities. It’s a big box full of numbers. No code. Just numbers.

A “Large” Language Model is a very, very large collection of such numbers. GPT-4, the model behind the latest version of ChatGPT, is reported to have around 1.76 trillion parameters, although OpenAI has not confirmed that figure (GPT-3 “only” had 175 billion). That’s a huge number. When it comes to AI, bigger has so far generally meant better: the more stored numbers, the better the output.

If you want a deeper explanation and even more examples, please read Stephen Wolfram’s explanation on how ChatGPT works. It’s a good read.

Where does a Large Language Model get the probabilities?

The Language Model has learned these probabilities by reading a lot of text. This process is called “training” and requires a lot of text and computing time. All the text it reads (and re-reads multiple times) is called the “training dataset”. It is not publicly disclosed how big the training dataset of ChatGPT is, but estimates range between 570 GB and 45 TB. Basically, it was trained on a very large share of the text available online and in print, both current and historic.
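As a rough illustration of what “learning probabilities by reading text” means, the sketch below counts which word follows which in a tiny, invented corpus. This is a deliberate simplification: real LLMs do not count word pairs, they adjust the weights of a neural network. The function name `train` and the three sentences are assumptions made for this example.

```python
from collections import Counter, defaultdict


def train(corpus):
    """Turn a list of sentences into next-word probabilities by counting."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Count every (current word, following word) pair in the sentence.
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    # Convert the raw counts into probabilities that sum to 1 per word.
    return {
        word: {nxt: n / sum(followers.values()) for nxt, n in followers.items()}
        for word, followers in counts.items()
    }


# A tiny invented "training dataset" written by humans.
corpus = [
    "the car drove into the driveway",
    "the car drove into the garage",
    "the car stopped",
]

model = train(corpus)
print(model["car"])   # "drove" gets 2/3, "stopped" gets 1/3
print(model["into"])  # "the" is the only word ever seen after "into"
```

The point of the sketch is only that the numbers come from the text: change the sentences and the probabilities change with them.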

Most of the text in the training dataset was written by humans.

That part is important. This means that a Large Language Model is a model of how we humans write text (or, to be more precise, how we used to write text up until now). The probabilities are there because that’s how humans write.

For example, this word order has a high probability:

“The car drove into the driveway.”

This word order has a lower probability:

“The driveway the car into drove”

Because that’s Yoda-speak, which is not commonly used in writing.

This word order has an even lower probability:

“Car The into driveway the drove”

That’s because that word order doesn’t even form a grammatically correct sentence, so it hardly ever appears in the training dataset (remember, all that text written by humans). It has a low probability and is thus very unlikely to be generated by a language model.
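The three word orders above can be compared with a toy model. The sketch below counts word pairs in a small invented corpus and adds up the log-probability of each pair in a sentence, using add-one smoothing so that unseen pairs get a small but non-zero probability. The corpus, the helper name `log_probability` and the smoothing choice are assumptions for illustration only.

```python
import math
from collections import Counter

# Invented stand-in for "text written by humans".
CORPUS = [
    "the car drove into the driveway",
    "the car drove into the garage",
    "the dog ran into the house",
]

pair_counts = Counter()  # how often word B follows word A
prev_counts = Counter()  # how often word A is followed by anything
vocab = set()
for sentence in CORPUS:
    words = sentence.lower().split()
    vocab.update(words)
    for prev, nxt in zip(words, words[1:]):
        pair_counts[(prev, nxt)] += 1
        prev_counts[prev] += 1


def log_probability(sentence):
    """Sum of log P(next | previous) over all word pairs (add-one smoothing)."""
    words = sentence.lower().split()
    total = 0.0
    for prev, nxt in zip(words, words[1:]):
        p = (pair_counts[(prev, nxt)] + 1) / (prev_counts[prev] + len(vocab))
        total += math.log(p)
    return total


normal = log_probability("The car drove into the driveway")
yoda = log_probability("The driveway the car into drove")
scrambled = log_probability("Car The into driveway the drove")

# Values closer to zero mean "more probable".
for label, score in [("normal", normal), ("yoda", yoda), ("scrambled", scrambled)]:
    print(f"{label:10s} {score:7.2f}")
```

With this toy corpus the normal sentence scores highest and the fully scrambled one lowest, which mirrors the ordering described above.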

You can see this live in your smartphone when typing. The autocomplete function is based on the same technology. It will show you what the most probable ending of your sentence will be.

Now you know how AI text generators work. No magic included, just a lot of numbers.

OK, so back to the original question: How do AI Detectors work?

First, please remember to use the phrase “comparison tool” in your head whenever you see the word “detector”.

We know how an AI creates text, so we can look at a text and ask: “Does this look like a text an AI would write?”

Since an AI uses probabilities to generate text, an AI-generated text will have word patterns with high probabilities. If a text uses high-probability word orders (for example “The car drove into the driveway.”) rather than low-probability ones (“The driveway the car into drove”), it looks like a text that an AI would write.

If a text “looks like a text that an AI would write” the “AI detector” gives it a high “AI score”.

Another way of phrasing it is: If a text has many sentences with word orders of high probability, it gets a high “AI score”.
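One common way to express “how probable is this word order” as a single number is perplexity: the lower the perplexity, the more predictable the text. The sketch below takes a list of per-word probabilities and turns it into a 0 to 100 score. This article does not say which metric any particular tool uses, so treat the choice of perplexity as an assumption, and the `ai_score` formula and its `threshold` value as invented for illustration.

```python
import math


def perplexity(word_probs):
    """Perplexity of a text, given the probability a model gave each word."""
    if not word_probs:
        raise ValueError("need at least one probability")
    avg_log = sum(math.log(p) for p in word_probs) / len(word_probs)
    return math.exp(-avg_log)


def ai_score(word_probs, threshold=20.0):
    """Hypothetical 0-100 score: predictable text (low perplexity) scores high.

    The formula and the threshold are made up for this example.
    """
    ppl = perplexity(word_probs)
    return round(100 * threshold / (threshold + ppl))


# Invented probabilities: every word was an "obvious" choice for the model.
predictable_text = [0.9, 0.8, 0.95, 0.9]
# Invented probabilities: every word was a surprise for the model.
surprising_text = [0.05, 0.01, 0.1, 0.02]

print(ai_score(predictable_text), ai_score(surprising_text))
```

Note that nothing in this calculation knows who wrote the text. A human who writes predictable sentences gets exactly the same score as a machine that does.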

The important phrase here is: “looks like”. Remember, there is no DNA, fingerprint or watermark. Just text.

The text could also have been written by a human. A human who wrote it in the same way a machine would.

And the probability for that is high!

Remember all those texts that the AI was trained on? Those were written by humans. And those are the foundation of all those mathematical probabilities and calculations.

The more occurrences of a phrase in the training set, the higher the probability. The higher the probability, the higher the “AI score” a “detector” will give it.

Famous movie quotes (“May the force be with you”) and famous political speeches (“I have a dream”) are very common in the training dataset. So those word orders are deemed highly probable and will give the text a high AI score.

Just for fun, you can try pasting the Declaration of Independence into a “detection tool” and see what it says. Very often it flags the text as AI-generated, which would mean that AI existed back in 1776.

Would you trust an advisor that told you that computers and advanced AI existed in 1776? I know I wouldn’t. I would not take business advice from that guy.

Remember, there is no DNA, there are no fingerprints, there is no watermark. There is no “proof of AI”. There is only text. We can only compare the text with other texts and then make some kind of judgement. This is what the “AI detectors” are trying to do. They are trying to make some kind of judgement by comparing the text with other texts.

And as you can see with the example of the Declaration of Independence, it’s very easy to find examples of when that judgement is wrong.

“AI detectors” can’t “detect” when a text is written by an AI, because a text that looks like an AI text could also have been written by a human.

If you rely on “AI detection tools” alone, you will risk having too many false positives.

Another example: Hands in images

We all know that image-generating AI tools, like DALL-E or Stable Diffusion, struggle with making images of hands. So much so that it even became a meme:

Does that mean that all images of “non-standard” hands are generated by AI?

Of course not!

Because having different types of hands is common in real life as well.

Here are some examples of amazing Paralympic swimmers.

Those photos are not generated by AI – despite the hands being different.

The same thing goes for text. Just because a text has the same style as an AI text, it does not mean it was written by an AI.

What AI detectors don’t look at

At Topcontent we use human writers, proofreaders and quality checkers to produce text.

The texts go through a process that involves the following things (among others):

- Research

- Fact checking

- Spelling and grammar checks

- Checking that the text follows the instructions from the client

If we compare human output and AI output we get the following table.

| Action | Humans | AI |
| --- | --- | --- |
| Research | Does research | Hallucinates and lies |
| Fact checking | Checks facts | Makes up its own facts |
| Spelling and grammar | Improves gradually | Perfect from start |
| Follow instructions | Follows instructions | Often ignores instructions |
| Speed | Takes time | Instant |
| Cost | Has a cost | Almost free |

None of the “AI detectors” we have tried look at these differences.

A good “AI detector” would check to see if the facts are wrong, if instructions are ignored, if spelling is perfect from the start and if the writing is done faster than normal.

AI detectors on the market don’t look at this, because that is very hard to do.

So we had to build it ourselves. We have these checks built into our Topcontent writing app.

The “AI detection tools” on the market rely too much on grammar and spelling

Before building it ourselves we evaluated the existing ones on the market.

What we noticed when evaluating them is that they tend to look a lot at grammar and spelling. The better the spelling and grammar, the higher the “AI score”.

At Topcontent our writers create the text inside our system and we have saved every version of every text since 2016. This means that we have a lot of interesting data to test with. All created by humans.

In our search for a good “AI detector” we tested different versions of the same texts, and we saw that the better the text became, the higher the “AI score” it got. A text that gets a low “AI probability score” when it’s just a draft from a writer will get a higher score after it has been proofread and quality checked by a professional editor.

The “first draft” version of texts always gets a lower “AI score” than a proofread text.

Here is an example:

Score of the first draft (full of spelling mistakes):

Score after proofreading:

The changes were fixes to grammatical errors and spelling mistakes, for example correctly writing “5,000 locations” instead of “5.000 locations”.

A better text suddenly became “AI”.

From the perspective of the “AI detector” this makes sense because humans are imperfect. Thus an imperfect text is probably written by a human.

Remember the sentence “Car The into driveway the drove”. It’s full of errors and thus has a low probability. So it will get a low “AI score”.

But would you like to have bad spelling and grammar on your website? Of course not!

If your team consists of highly professional and experienced writers, the chances of them scoring high as “AI” in an online tool are unfortunately very high.

So how can AI detection tools exist, if they don’t work?

Because people want to feel in control. Because people don’t want to be scammed.

The need is there.

And developers and entrepreneurs are trying to fulfil that need. And they are getting paid in ad revenue or subscriptions to try to do it. Every time you use an online “detection tool” somebody is getting paid.

But it gives a false sense of security.

When you should use “AI detectors”

As mentioned, we have a built-in “AI detector” in our system. So it would be a bit hypocritical of us to say that you shouldn’t use them.

There is a time and place for everything, and sometimes using an AI detector makes sense.

Let’s look at the differences between humans and machines again:

| Action | Humans | AI |
| --- | --- | --- |
| Research | Does research | Hallucinates and lies |
| Fact checking | Checks facts | Makes up its own facts |
| Spelling and grammar | Improves gradually | Perfect from start |
| Follow instructions | Follows instructions | Often ignores instructions |
| Speed | Takes time | Instant |
| Cost | Has a cost | Almost free |

If an AI detector gives you a high “AI score” that is an indicator that you should investigate the other points in this table.

- Are the facts correct?

- Was the text delivered unusually fast?

- Do we see a gradual decrease of spelling mistakes?

- Was the price point extremely low?

For example, if a writer only delivers text that has a high AI score and does so at an impressive rate (more than 800 words / hour for more than 6 hours / day), then that is an indication of using AI. Do some spot checks to verify that the facts are correct. You will probably find a lot of factual errors.
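The rule of thumb above can be written down as a small check. The sketch below is only one possible reading of it: the 800 words per hour and 6 hours per day limits come from the text, while the `Delivery` record, the 0 to 100 score scale and the `high_score` cutoff of 80 are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Delivery:
    """One day of delivered work from a writer (hypothetical record)."""
    words: int       # words delivered that day
    hours: float     # hours worked that day
    ai_score: float  # 0-100 score from whichever tool you use (assumed scale)


def needs_spot_check(deliveries, max_rate=800, max_hours=6, high_score=80):
    """True if every delivery scores high AND the pace exceeds both limits."""
    if not deliveries:
        return False
    all_high = all(d.ai_score >= high_score for d in deliveries)
    # The hours test runs first, so a day with 0 hours never causes a division.
    too_fast = all(
        d.hours > max_hours and d.words / d.hours > max_rate
        for d in deliveries
    )
    return all_high and too_fast


suspicious = [Delivery(6300, 7, 92), Delivery(6000, 6.5, 88)]
ordinary = [Delivery(3000, 6.5, 90)]

print(needs_spot_check(suspicious))  # True
print(needs_spot_check(ordinary))    # False
```

A `True` result is not a verdict. It only tells you where to spend your fact-checking time first.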

You don’t want to have factual mistakes on your website. Don’t work with that writer.

How you should determine if a text is for you

You should not use an “AI detection tool” to determine if a text should or should not go on your website.

Instead, you should evaluate the text on its own.

When looking at a text you have received from a writer, ask yourself the following questions:

- Is the text factually correct?

- Is the text helpful for my visitors?

- Does it follow the SEO standards necessary to rank?

- Is the text good enough to represent my brand?

If the answer to all those questions is “yes”, then that is more important than an arbitrary score from a random tool you found online.

At Topcontent, we do our utmost to give you those types of texts.